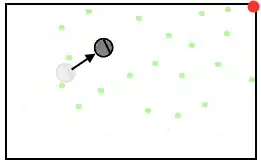

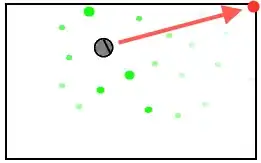

I am hacking a vacuum cleaner robot to control it with a microcontroller (Arduino). I want to make it more efficient when cleaning a room. For now, it just goes straight and turns when it hits something.
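To make the current behavior concrete, here is a rough Python sketch of the logic as it stands. The function name `next_command`, the `bumped` flag, and the random turn angle are placeholders made up for illustration; they are not the robot's real firmware, and the actual turn angle may differ.

```python
import random


def next_command(bumped, rng=random):
    """Decide one control step of the bump-and-turn behavior.

    bumped: True if the robot has just hit an obstacle (hypothetical flag).
    Returns a (command, argument) pair.
    """
    if bumped:
        # Assumption: turn by a random angle in degrees; the real robot
        # may use a fixed angle instead.
        return ("turn", rng.uniform(90.0, 270.0))
    # No obstacle: keep driving straight.
    return ("forward", None)
```

Nothing in this loop keeps track of where the robot is, which is exactly the part I am missing.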

But I have trouble finding the best algorithm or method for determining its position in the room. I am looking for an idea that stays cheap (less than $100) and is not too complex (one that doesn't require a PhD thesis in computer vision). I can add some discreet markers in the room if necessary.

Right now, my robot has:

- One webcam

- Three proximity sensors (around 1 meter range)

- Compass (not used for now)

- Wi-Fi

- Its speed varies depending on whether the battery is full or nearly empty

- An Eee PC netbook mounted on the robot

Do you have any ideas for doing this? Is there a standard method for this kind of problem?

Note: if this question belongs on another website, please move it; I couldn't find a better place than Stack Overflow.