Is there a simple way to trim whitespace from an image in PIL?

ImageMagick supports this easily:

```
convert test.jpeg -fuzz 7% -trim test_trimmed.jpeg
```

I found a solution for PIL:

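```python
from PIL import Image, ImageChops

def trim(im, border):
    # Solid image filled with the border color, same mode and size as im
    bg = Image.new(im.mode, im.size, border)
    # Pixels that match the border color become zero, everything else non-zero
    diff = ImageChops.difference(im, bg)
    # Bounding box of the non-zero region, or None if the image is uniform
    bbox = diff.getbbox()
    if bbox:
        return im.crop(bbox)
    return im  # nothing to trim
```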
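To see how the `difference`/`getbbox` approach behaves, and why exact color matching is fragile, it can be run on a small synthetic image. The image size, colors and pixel positions below are made up for illustration; the steps are the same ones `trim()` performs.

```python
from PIL import Image, ImageChops

# Hypothetical test image: white 100x100 canvas with a black box
im = Image.new("RGB", (100, 100), (255, 255, 255))
im.paste((0, 0, 0), (20, 30, 60, 70))  # box covers x 20..59, y 30..69

# Same steps as trim(): compare against a solid border-colored image
bg = Image.new(im.mode, im.size, (255, 255, 255))
bbox_clean = ImageChops.difference(im, bg).getbbox()
print(bbox_clean)  # (20, 30, 60, 70): right/lower edges are exclusive

# One near-white pixel (e.g. a JPEG artifact) differs by only 5 per channel,
# but any non-zero difference counts, so the box stretches to include it
im.putpixel((95, 95), (250, 250, 250))
bbox_noisy = ImageChops.difference(im, bg).getbbox()
print(bbox_noisy)  # (20, 30, 96, 96)
```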

But this solution has disadvantages:

- I need to define the `border` color. This is not a big deal for me, since my images have a white background.
- The biggest disadvantage: this PIL solution has no equivalent of ImageMagick's `-fuzz` option, i.e. fuzzy cropping. I need that because my images can have JPEG compression artifacts and large, unneeded shadows.
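A rough sketch of what a fuzz-tolerant variant might look like in plain PIL, addressing both points above. Assumptions: `trim_fuzzy` is a hypothetical helper name; `fuzz` is taken as a fraction of 255 and applied to the largest per-channel difference from the background, which is NOT the same color-distance metric ImageMagick uses for `-fuzz`, so `0.07` will not crop exactly like `-fuzz 7%`; and when no `border` is given, the top-left pixel is assumed to be background.

```python
from PIL import Image, ImageChops


def trim_fuzzy(im, fuzz=0.07, border=None):
    """Crop a near-uniform border from im (hypothetical helper, a sketch).

    fuzz   -- tolerance as a fraction of 255, compared against the largest
              per-channel difference (not ImageMagick's exact metric)
    border -- background color; if None, the top-left pixel is assumed
              to be background
    """
    rgb = im.convert("RGB")
    if border is None:
        border = rgb.getpixel((0, 0))
    bg = Image.new("RGB", rgb.size, border)

    # Absolute per-channel difference from the background color
    r, g, b = ImageChops.difference(rgb, bg).split()
    # Keep the largest of the three channel differences for each pixel
    diff = ImageChops.lighter(ImageChops.lighter(r, g), b)

    # Pixels within the tolerance become 0, everything else 255
    threshold = round(fuzz * 255)
    mask = diff.point(lambda v: 255 if v > threshold else 0)

    bbox = mask.getbbox()  # None if every pixel is within tolerance
    return im.crop(bbox) if bbox else im


# Example: white canvas, black box, one near-white "artifact" pixel
img = Image.new("RGB", (100, 100), "white")
img.paste((0, 0, 0), (20, 30, 60, 70))   # the "content"
img.putpixel((95, 95), (250, 250, 250))  # simulated JPEG artifact
trimmed = trim_fuzzy(img)                # 7% tolerance: artifact ignored
strict = trim_fuzzy(img, fuzz=0)         # exact match: artifact kept
print(trimmed.size, strict.size)         # (40, 40) (76, 66)
```

The per-pixel work happens inside PIL's own routines (`difference`, `lighter`, `point` with a 256-entry lookup), so this should stay reasonably fast. Faint shadows could be absorbed by raising `fuzz`, at the risk of cropping into light-colored content; a suitable value would have to be found per image set.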

Does PIL have a built-in function for this? Or is there some other fast solution?

Cropped:

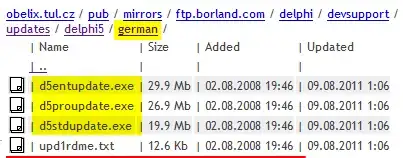

Cropped:

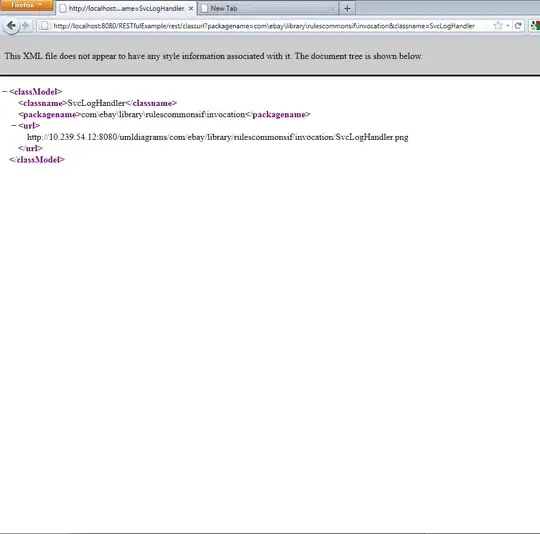