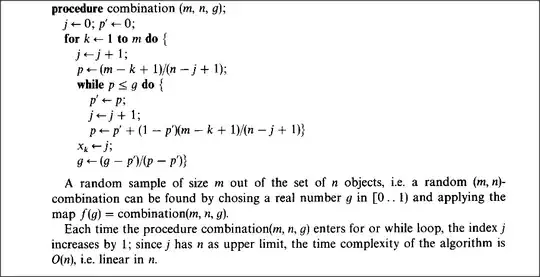

The other methods will work only if the content of the rectangle is in the rotated image after rotation and will fail badly in other situations. What if some of the part are lost? See an example below:

Suppose you crop the rotated rectangular text area using the method above:

```python
import cv2
import numpy as np


def main():
    img = cv2.imread("big_vertical_text.jpg")
    if img is None:
        raise FileNotFoundError("cannot read big_vertical_text.jpg")

    # corners of the text region as an OpenCV contour of shape (4, 1, 2)
    cnt = np.array([
        [[64, 49]],
        [[122, 11]],
        [[391, 326]],
        [[308, 373]]
    ])
    print("shape of cnt: {}".format(cnt.shape))

    # minimum-area rotated rectangle: ((cx, cy), (width, height), angle)
    rect = cv2.minAreaRect(cnt)
    print("rect: {}".format(rect))

    # the rectangle's four corners, converted to integers for drawing
    box = cv2.boxPoints(rect)
    box = np.intp(box)  # np.int0 is an old alias that NumPy 2.0 removed
    print("bounding box: {}".format(box))
    cv2.drawContours(img, [box], 0, (0, 0, 255), 2)

    img_crop, img_rot = crop_rect(img, rect)
    print("size of original img: {}".format(img.shape))
    print("size of rotated img: {}".format(img_rot.shape))
    print("size of cropped img: {}".format(img_crop.shape))

    # display the full-size images at half resolution
    new_size = (img_rot.shape[1] // 2, img_rot.shape[0] // 2)
    img_rot_resized = cv2.resize(img_rot, new_size)
    new_size = (img.shape[1] // 2, img.shape[0] // 2)
    img_resized = cv2.resize(img, new_size)

    cv2.imshow("original contour", img_resized)
    cv2.imshow("rotated image", img_rot_resized)
    cv2.imshow("cropped_box", img_crop)
    # cv2.imwrite("crop_img1.jpg", img_crop)
    cv2.waitKey(0)


def crop_rect(img, rect):
    # get the parameters of the small rectangle
    center, size, angle = rect[0], rect[1], rect[2]
    center, size = tuple(map(int, center)), tuple(map(int, size))

    # get the number of rows and columns of img
    height, width = img.shape[0], img.shape[1]
    print("width: {}, height: {}".format(width, height))

    # rotate the whole image about the rectangle's centre so the rectangle
    # becomes axis-aligned; the output canvas keeps the original size,
    # so anything rotated outside it is lost
    M = cv2.getRotationMatrix2D(center, angle, 1)
    img_rot = cv2.warpAffine(img, M, (width, height))

    # cut the now upright rectangle out around its centre
    img_crop = cv2.getRectSubPix(img_rot, size, center)
    return img_crop, img_rot


if __name__ == "__main__":
    main()
```

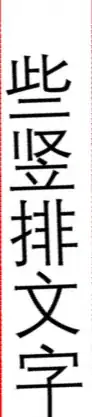

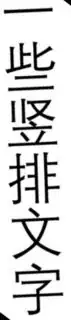

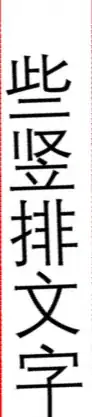

This is what you will get:

Apparently, some parts are cut out! Why not directly warp the rotated rectangle, since we can get its four corner points with the cv2.boxPoints() method?

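```python
import cv2
import numpy as np


def main():
    img = cv2.imread("big_vertical_text.jpg")
    if img is None:
        raise FileNotFoundError("cannot read big_vertical_text.jpg")

    cnt = np.array([
        [[64, 49]],
        [[122, 11]],
        [[391, 326]],
        [[308, 373]]
    ])
    print("shape of cnt: {}".format(cnt.shape))

    rect = cv2.minAreaRect(cnt)
    print("rect: {}".format(rect))

    # float32 corners in the rectangle's own frame, ordered
    # bottom-left, top-left, top-right, bottom-right (no need to round them)
    box = cv2.boxPoints(rect)

    # size of the output crop
    width = int(rect[1][0])
    height = int(rect[1][1])

    src_pts = box.astype("float32")
    # corners of an upright width x height image, in the same order as box
    dst_pts = np.array([[0, height-1],
                        [0, 0],
                        [width-1, 0],
                        [width-1, height-1]], dtype="float32")

    # map the rotated corners straight onto the upright crop;
    # only the small output canvas is rendered, the full image is never rotated
    M = cv2.getPerspectiveTransform(src_pts, dst_pts)
    warped = cv2.warpPerspective(img, M, (width, height))

    cv2.imshow("warped", warped)
    cv2.waitKey(0)


if __name__ == "__main__":
    main()
```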

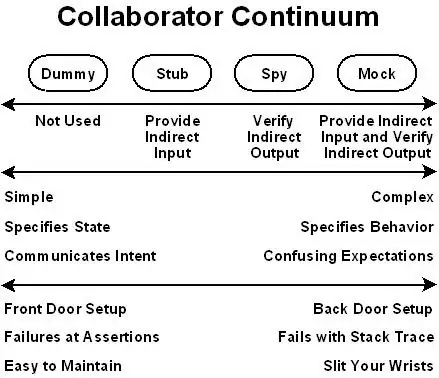

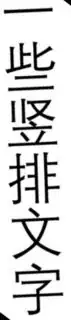

Now the cropped image becomes

Much better, isn't it? If you look carefully, you will notice some black areas in the cropped image. That is because a small part of the detected rectangle lies outside the image bounds, and cv2.warpPerspective() fills pixels sampled from outside the image with black by default. To remedy this, you can pad the image a little and do the crop after that. There is an example illustrated in this answer.

Now, let us compare the two methods for cropping a rotated rectangle from an image.

The perspective-warp method does not require rotating the whole image, and it handles the problem of lost parts more elegantly and with less code.