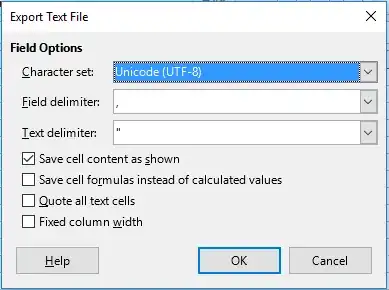

I have an Excel file that has some Spanish characters (tildes, etc.) that I need to convert to a CSV file to use as an import file. However, when I do Save As CSV it mangles the "special" Spanish characters that aren't ASCII characters. It also seems to do this with the left and right quotes and long dashes that appear to be coming from the original user creating the Excel file in Mac.

Since CSV is just a text file I'm sure it can handle a UTF8 encoding, so I'm guessing it is an Excel limitation, but I'm looking for a way to get from Excel to CSV and keep the non-ASCII characters intact.