The answer: it depends on the problem at hand. For your case of one-step prediction - yes, you can, but you don't have to. Whether you do or not, however, will significantly impact learning.

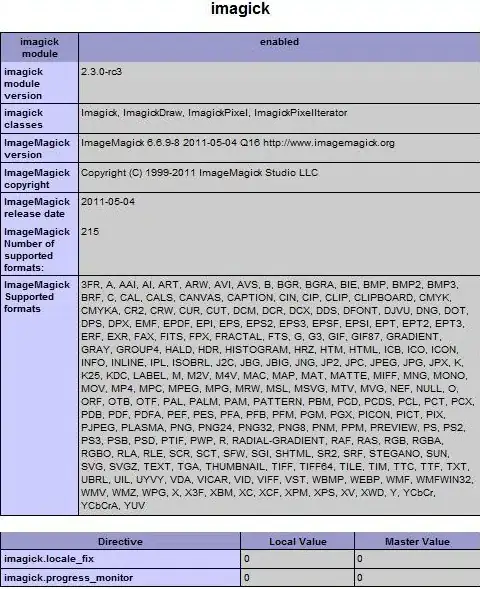

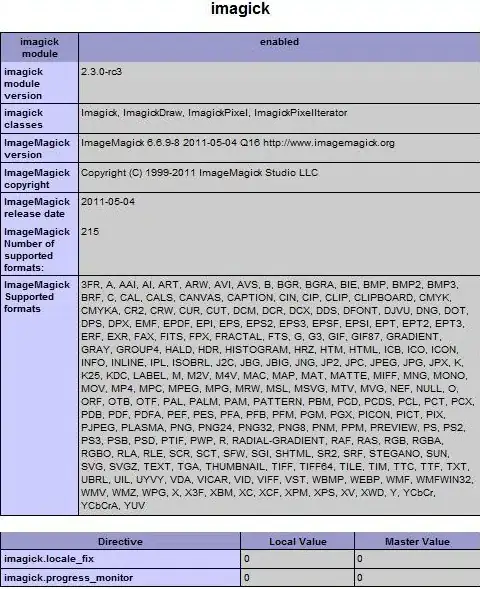

Batch vs. sample mechanism ("see AI" = see "additional info" section)

All models treat samples as independent examples; a batch of 32 samples is like feeding 1 sample at a time, 32 times (with differences - see AI). From the model's perspective, data is split into the batch dimension, batch_shape[0], and the feature dimensions, batch_shape[1:] - the two "don't talk." The only relation between the two is via the gradient (see AI).
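To make the two claims concrete, here is a minimal pure-Python sketch using a hypothetical one-weight linear model (the names `predict`, `sample_grad`, and `batch_grad` are illustrative, not from any library). It shows that a sample's output doesn't depend on its batch-mates, and that the gradient is the only place where samples get combined.

```python
def predict(w, x):
    """Toy model: the output for one sample depends only on that sample."""
    return w * x

def sample_grad(w, x, y):
    """Gradient of the squared error (w*x - y)**2 w.r.t. w, for one sample."""
    return 2.0 * (predict(w, x) - y) * x

def batch_grad(w, xs, ys):
    """Gradient of the batch's mean squared error: the mean of per-sample gradients."""
    return sum(sample_grad(w, x, y) for x, y in zip(xs, ys)) / len(xs)

w = 0.5
xs = [1.0, 2.0, 3.0, 4.0]   # a "batch" of 4 one-feature samples
ys = [2.0, 4.0, 6.0, 8.0]

# Forward pass: same output whether the sample is processed alone or in a batch.
batch_out = [predict(w, x) for x in xs]
solo_out = predict(w, xs[2])

# Backward pass: samples only "meet" when their gradients are averaged.
g = batch_grad(w, xs, ys)
```

A real network has many weights and nonlinear layers, but the same structure holds for the layers discussed here: per-sample forward pass, averaged gradient.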

Overlap vs no-overlap batch

Perhaps the best approach to understand it is information-based. I'll begin with timeseries binary classification, then tie it to prediction: suppose you have 10-minute EEG recordings, 240000 timesteps each. Task: seizure or non-seizure?

- As 240k is too much for an RNN to handle, we use CNN for dimensionality reduction

- We have the option to use "sliding windows" - i.e. feed a subsegment at a time; let's use 54k

Take 10 samples, shape (240000, 1). How to feed?

1. (10, 54000, 1), all samples included, slicing as sample[0:54000]; sample[54000:108000] ...
2. (10, 54000, 1), all samples included, slicing as sample[0:54000]; sample[1:54001] ...

Which of the two above do you take? If (2), your neural net will never confuse a seizure for a non-seizure for those 10 samples. But it'll also be clueless about any other sample. I.e., it will massively overfit, because the information it sees per iteration barely differs (1/54000 = 0.0019%) - so you're basically feeding it the same batch several times in a row. Now suppose (3):

3. (10, 54000, 1), all samples included, slicing as sample[0:54000]; sample[27000:81000] ...

A lot more reasonable; now our windows have a 50% overlap, rather than 99.998%.
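The three slicing options differ only in their stride. Below is a small sketch of the arithmetic, assuming fixed-length windows and dropping any incomplete window at the end; `window_starts` and `overlap_fraction` are hypothetical helper names.

```python
def window_starts(seq_len, window, stride):
    """Start indices of all full windows of length `window`, stepping by `stride`."""
    return list(range(0, seq_len - window + 1, stride))

def overlap_fraction(window, stride):
    """Fraction of a window shared with the next one (0.0 when stride >= window)."""
    return max(window - stride, 0) / window

SEQ_LEN, WINDOW = 240_000, 54_000

options = [
    ("no overlap", WINDOW),        # sample[0:54000]; sample[54000:108000] ...
    ("shift by 1", 1),             # sample[0:54000]; sample[1:54001] ...
    ("50% overlap", WINDOW // 2),  # sample[0:54000]; sample[27000:81000] ...
]

for name, stride in options:
    starts = window_starts(SEQ_LEN, WINDOW, stride)
    frac = overlap_fraction(WINDOW, stride)
    print(f"{name}: {len(starts)} windows per sample, overlap {frac:.3%}")
```

Each start index `s` corresponds to the slice `sample[s:s + WINDOW]`; stacking one such slice from each of the 10 samples gives a batch of shape (10, 54000, 1).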

Prediction: overlap bad?

If you are doing a one-step prediction, the information landscape is now changed:

- Chances are, your sequence length is faaar from 240000, so overlaps of any kind don't suffer the "same batch several times" effect

- Prediction fundamentally differs from classification in that, the labels (next timestep) differ for every subsample you feed; classification uses one for the entire sequence

This dramatically changes your loss function, and what is 'good practice' for minimizing it:

- A predictor must be robust to its initial sample, especially for LSTM - so we train for every such "start" by sliding the sequence as you have shown

- Since labels differ timestep-to-timestep, the loss function changes substantially timestep-to-timestep, so risks of overfitting are far less
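For reference, the one-step setup described above (inputs slide by one step, and each window gets its own label) can be sketched as follows. This is an illustration on a toy list, with `one_step_pairs` and `lookback` as hypothetical names; it is not taken from the question's code.

```python
def one_step_pairs(series, lookback):
    """Slide a window one step at a time; the label is the value right after the window."""
    inputs, labels = [], []
    for start in range(len(series) - lookback):
        inputs.append(series[start:start + lookback])
        labels.append(series[start + lookback])
    return inputs, labels

series = [10, 11, 12, 13, 14, 15]
X, y = one_step_pairs(series, lookback=3)
# X == [[10, 11, 12], [11, 12, 13], [12, 13, 14]]
# y == [13, 14, 15]
```

Neighboring windows overlap heavily, yet every window has a different label, which is why the "same batch several times" problem from classification doesn't carry over.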

What should I do?

First, make sure you understand this entire post, as nothing here's really "optional." Then, here's the key about overlap vs no-overlap, per batch:

- One sample shifted: model learns to better predict one step ahead for each starting step - meaning: (1) LSTM's robust against initial cell state; (2) LSTM predicts well for any step ahead given X steps behind

- Many samples, shifted in later batch: model less likely to 'memorize' train set and overfit

Your goal: balance the two; 1's main edge over 2 is:

- 2 can handicap the model by making it forget seen samples

- 1 allows model to extract better quality features by examining the sample over several starts and ends (labels), and averaging the gradient accordingly

Should I ever use (2) in prediction?

- If your sequence lengths are very long and you can afford to "slide window" w/ ~50% its length, maybe, but depends on the nature of data: signals (EEG)? Yes. Stocks, weather? Doubt it.

- Many-to-many prediction: more common to see (2), in large part because sequences tend to be longer.

LSTM stateful: may actually be entirely useless for your problem.

Stateful is used when the LSTM can't process the entire sequence at once, so it's "split up" - or when different gradients are desired from backpropagation. With the former, the idea is that the LSTM considers the earlier sequence in its assessment of the later one:

- t0=seq[0:50]; t1=seq[50:100] makes sense; t0 logically leads to t1
- seq[0:50] --> seq[1:51] makes no sense; t1 doesn't causally derive from t0

In other words: do not overlap in stateful in separate batches. Same batch is OK, as again, independence - no "state" between the samples.

When to use stateful: when LSTM benefits from considering previous batch in its assessment of the next. This can include one-step predictions, but only if you can't feed the entire seq at once:

- Desired: 100 timesteps. Can do: 50. So we set up t0, t1 as in above's first bullet.

- Problem: not straightforward to implement programmatically. You'll need to find a way to feed to LSTM while not applying gradients - e.g. freezing weights or setting lr = 0.

When and how does LSTM "pass states" in stateful?

- When: only batch-to-batch; samples are entirely independent

- How: in Keras, only batch-sample to batch-sample:

stateful=True requires you to specify batch_shape instead of input_shape, because Keras builds batch_size separate states of the LSTM at compile time

Per above, you cannot do this:

# sampleNM = sample N at timestep(s) M

batch1 = [sample10, sample20, sample30, sample40]

batch2 = [sample21, sample41, sample11, sample31]

This implies 21 causally follows 10 - and will wreck training. Instead do:

batch1 = [sample10, sample20, sample30, sample40]

batch2 = [sample11, sample21, sample31, sample41]
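One way to get this ordering right by construction is to build batches so that batch k holds chunk k of every sample, with sample i always in slot i. A minimal sketch, assuming equal-length samples held as plain lists; `stateful_batches` and `chunk_len` are hypothetical names, not Keras API.

```python
def stateful_batches(samples, chunk_len):
    """Split each sample into consecutive, non-overlapping chunks.

    Batch k holds chunk k of every sample, and sample i always sits in slot i,
    so the state carried over in slot i belongs to the same sample.
    """
    n_chunks = min(len(s) for s in samples) // chunk_len
    return [
        [s[k * chunk_len:(k + 1) * chunk_len] for s in samples]
        for k in range(n_chunks)
    ]

# Three toy samples; the hundreds digit identifies the sample.
samples = [list(range(100, 104)), list(range(200, 204)), list(range(300, 304))]
batches = stateful_batches(samples, chunk_len=2)
# batches[0] == [[100, 101], [200, 201], [300, 301]]
# batches[1] == [[102, 103], [202, 203], [302, 303]]
```

The batches then have to be fed in this order, without shuffling, and the states reset before moving on to an unrelated set of samples.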

Batch vs. sample: additional info

A "batch" is a set of samples - 1 or greater (assume always latter for this answer). Three approaches to iterate over data: Batch Gradient Descent (entire dataset at once), Stochastic GD (one sample at a time), and Minibatch GD (in-between). (In practice, however, we call the last SGD also and only distinguish vs BGD - assume it so for this answer.) Differences:

- SGD never actually optimizes the train set's loss function - only its 'approximations'; every batch is a subset of the entire dataset, and the gradients computed only pertain to minimizing loss of that batch. The greater the batch size, the better its loss function resembles that of the train set.

- Above can extend to fitting batch vs. sample: a sample is an approximation of the batch - or, a poorer approximation of the dataset

- First fitting 16 samples and then 16 more is not the same as fitting 32 at once - since weights are updated in-between, so model outputs for the latter half will change

- The main reason for picking SGD over BGD is not, in fact, computational limitations - but that it's superior, most of the time. Explained simply: a lot easier to overfit with BGD, and SGD converges to better solutions on test data by exploring a more diverse loss space.
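The "16 then 16 is not 32 at once" point is easy to check numerically. Here's a toy sketch with a one-weight linear model and plain gradient descent; the data, learning rate, and function names are all made up for illustration.

```python
def mse_grad(w, xs, ys):
    """Gradient of mean squared error for the toy model y_hat = w * x."""
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)

def sgd_step(w, xs, ys, lr):
    """One plain gradient-descent update on the given batch."""
    return w - lr * mse_grad(w, xs, ys)

xs = [float(i) for i in range(1, 33)]   # 32 toy samples
ys = [3.0 * x for x in xs]              # true weight is 3.0
w0, lr = 0.0, 1e-4

# One update on all 32 samples.
w_full = sgd_step(w0, xs, ys, lr)

# Two updates on 16 samples each: the second gradient is computed
# with a weight that the first update has already changed.
w_half = sgd_step(w0, xs[:16], ys[:16], lr)
w_split = sgd_step(w_half, xs[16:], ys[16:], lr)

print(w_full, w_split)  # the two end up at different weights
```

Both runs see the same 32 samples once, but they land on different weights because the split run takes two steps and evaluates the second gradient at an updated `w`.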

BONUS DIAGRAMS: