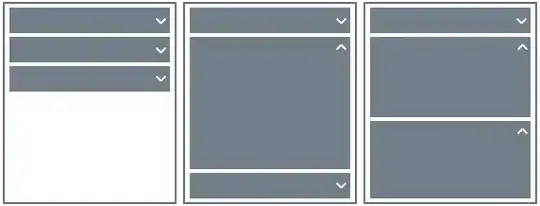

I have a simple 2-dimensional dataset that I wish to cluster in an agglomerative manner, without knowing the optimal number of clusters in advance. So far, the only way I've been able to cluster my data successfully is by passing a 'maxclust' value to clusterdata.

For simplicity's sake, let's say this is my dataset:

X = [1,1; 1,2; 2,2; 2,1; 5,4; 5,5; 6,5; 6,4];

Naturally, I would want this data to form 2 clusters. I understand that if I knew this, I could just say:

T = clusterdata(X,'maxclust',2);

and to find which points fall into each cluster I could say:

cluster_1 = X(T==1, :);

and

cluster_2 = X(T==2, :);

but without knowing that 2 clusters would be optimal for this dataset, how do I cluster these data?
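One direction that might apply here is to cut the hierarchical tree at a distance threshold instead of asking for a fixed number of clusters. The following is only a rough sketch, assuming the Statistics and Machine Learning Toolbox is available; the single-linkage method and the cutoff of 2 are assumptions picked by eye for this toy dataset, not values that would carry over to real data.

```matlab
% Toy dataset from the question: two well-separated groups of four points.
X = [1,1; 1,2; 2,2; 2,1; 5,4; 5,5; 6,5; 6,4];

% Build the agglomerative tree (single linkage, Euclidean distance).
Z = linkage(X, 'single');

% Cut the tree wherever the merge distance reaches the threshold.
% The threshold of 2 is an assumed value: points within each group are
% 1 apart, while the two groups are about 3.6 apart.
cutoffDistance = 2;
T = cluster(Z, 'cutoff', cutoffDistance, 'criterion', 'distance');

% The number of clusters now falls out of the cut rather than being supplied.
numClusters = max(T);

% Collect the points of each cluster into a cell array.
clusters = cell(numClusters, 1);
for k = 1:numClusters
    clusters{k} = X(T == k, :);
end
```

With this data the cut yields two clusters of four points each, but the result depends entirely on the chosen threshold, so the question becomes how to pick that threshold sensibly.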

Thanks