Note:

This answer shows how to switch the character encoding in the Windows console to (BOM-less) UTF-8 (code page 65001), so that shells such as cmd.exe and PowerShell properly encode and decode characters (text) when communicating with external (console) programs with full Unicode support, and, in cmd.exe, also for file I/O.[1]

If, by contrast, your concern is about the separate aspect of the limitations of Unicode character rendering in console windows, see the middle and bottom sections of this answer, where alternative console (terminal) applications are discussed too.
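To make the encode/decode point concrete, here is a small illustrative sketch (not part of the original answer) of what happens when one side writes UTF-8 and the other side decodes the same bytes with a single-byte code page. It uses Latin-1 (code page 28591) because it is built into every .NET version. For the bytes involved here, it agrees with Windows-1252, a common ANSI code page.

```powershell
# Build "hü" via [char] 0xFC so the result doesn't depend on the script file's own encoding.
$text = "h$([char] 0xFC)"

# Encode the string as UTF-8, as a UTF-8-speaking program would write it.
$bytes = [System.Text.Encoding]::UTF8.GetBytes($text)

# Decode the same bytes correctly (UTF-8) and incorrectly (Latin-1, code page 28591).
$correct = [System.Text.Encoding]::UTF8.GetString($bytes)
$garbled = [System.Text.Encoding]::GetEncoding(28591).GetString($bytes)

"Bytes:   $(($bytes | ForEach-Object { '{0:X2}' -f $_ }) -join ' ')"  # 68 C3 BC
"Correct: $correct"                                                   # hü
"Garbled: $garbled"                                                   # hÃ¼
```

The garbled result, hÃ¼, is a typical symptom of UTF-8 output being decoded with the wrong code page.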

Does Microsoft provide an improved / complete alternative to chcp 65001 that can be saved permanently without manual alteration of the Registry?

As of (at least) Windows 10, version 1903, you have the option to set the system locale (language for non-Unicode programs) to UTF-8, but the feature is still in beta as of this writing and has far-reaching consequences.

To activate it:

- Run

intl.cpl (which opens the regional settings in Control Panel)

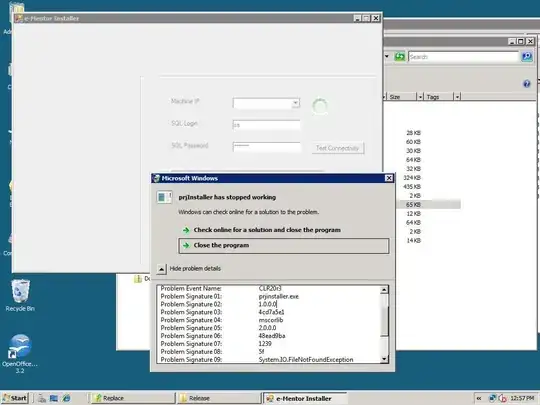

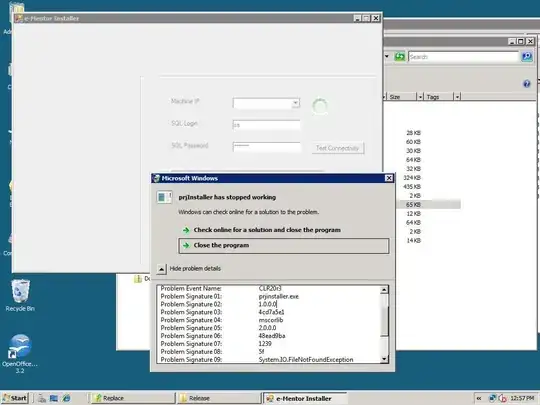

- Follow the instructions in the screen shot below.

This sets both the system's active OEM and ANSI code pages to 65001, the UTF-8 code page. This (a) makes all future console windows, which use the OEM code page, default to UTF-8 (as if chcp 65001 had been executed in a cmd.exe window), and (b) makes legacy, non-Unicode GUI-subsystem applications, which (among other things) use the ANSI code page, use UTF-8 as well.
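If you want to verify which code pages are in effect after applying the change, you can read them from the registry. The following is a sketch: the function name Get-ActiveCodePage is hypothetical, and it assumes the standard location HKLM:\SYSTEM\CurrentControlSet\Control\Nls\CodePage, whose ACP and OEMCP values hold the ANSI and OEM code pages. (Alternatively, running chcp without arguments in a new cmd.exe window reports the console's active code page.)

```powershell
# Hypothetical helper: report the system's active ANSI and OEM code pages.
# Windows only; with the UTF-8 (beta) option in effect, both should be 65001.
function Get-ActiveCodePage {
  $values = Get-ItemProperty -LiteralPath 'HKLM:\SYSTEM\CurrentControlSet\Control\Nls\CodePage'
  [pscustomobject] @{
    Ansi = [int] $values.ACP    # the registry stores the numbers as strings, e.g. '1252'
    Oem  = [int] $values.OEMCP  # e.g. '437' on US-English systems without the option
  }
}
```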

Caveats:

If you're using Windows PowerShell, this will also make Get-Content and Set-Content, as well as other contexts where Windows PowerShell defaults to the system's active ANSI code page (notably, reading source code from BOM-less files), default to UTF-8 (which PowerShell Core (v6+) always does). This means that, in the absence of an -Encoding argument, BOM-less files that are ANSI-encoded (which is historically common) will then be misread, and files created with Set-Content will be UTF-8-encoded rather than ANSI-encoded.

- Similarly, legacy (non-Unicode) non-console applications will then misinterpret ANSI-encoded files.

Pick a TT (TrueType) font, but note that even TrueType fonts usually support only a subset of all characters, so you may have to experiment with specific fonts to see whether all the characters you care about are represented. See this answer for details; it also discusses alternative console (terminal) applications that have better Unicode rendering support.

As eryksun points out, legacy console applications that do not "speak" UTF-8 will be limited to ASCII-only input and will produce incorrect output when trying to print characters outside the (7-bit) ASCII range. (In the obsolescent Windows 7 and below, such programs may even crash.)

If running legacy console applications is important to you, see eryksun's recommendations in the comments.
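Regarding the Get-Content / Set-Content caveat above: once the ANSI code page is 65001, Windows PowerShell's -Encoding Default means UTF-8 too, and Windows PowerShell's -Encoding parameter doesn't accept code-page numbers. Legacy ANSI files therefore have to be read via .NET with an explicitly named code page. The following is a sketch; the function name Read-AnsiFile is hypothetical, and the default code page 1252 (Western European) is an assumption, so substitute the ANSI code page your files were actually created with.

```powershell
# Hypothetical helper: read a BOM-less legacy ANSI file using an explicitly
# specified code page instead of the (now UTF-8) system default.
function Read-AnsiFile {
  param(
    [Parameter(Mandatory)] [string] $Path,
    [int] $CodePage = 1252  # assumption: Western European ANSI code page
  )
  # .NET resolves relative paths against its own working directory, which can
  # differ from PowerShell's current location, so resolve to a full path first.
  $fullPath = Convert-Path -LiteralPath $Path
  [System.IO.File]::ReadAllText($fullPath, [System.Text.Encoding]::GetEncoding($CodePage))
}
```

For example, Read-AnsiFile .\legacy.txt returns the file's text decoded as Windows-1252. Writing works analogously with [System.IO.File]::WriteAllText(), which accepts an encoding object as its third argument.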

However, for Windows PowerShell, that is not enough:

- You must additionally set the $OutputEncoding preference variable to UTF-8: `$OutputEncoding = [System.Text.UTF8Encoding]::new()`[2]. It's simplest to add that command to your $PROFILE (current user only) or $PROFILE.AllUsersCurrentHost (all users) file.

- Fortunately, this is no longer necessary in PowerShell Core, which internally consistently defaults to BOM-less UTF-8.

If setting the system locale to UTF-8 is not an option in your environment, use startup commands instead:

Note: The caveat re legacy console applications mentioned above equally applies here. If running legacy console applications is important to you, see eryksun's recommendations in the comments.

For PowerShell (both editions), add the following line to your $PROFILE (current user only) or $PROFILE.AllUsersCurrentHost (all users) file. It is the equivalent of chcp 65001, supplemented with setting the preference variable $OutputEncoding, which instructs PowerShell to send data to external programs via the pipeline in UTF-8:

```powershell
# Use BOM-less UTF-8 for the console's input/output and for piping data to external programs.
$OutputEncoding = [console]::InputEncoding = [console]::OutputEncoding = New-Object System.Text.UTF8Encoding
```

- Note that running chcp 65001 from inside a PowerShell session is not effective, because .NET caches the console's output encoding on startup and is unaware of later changes made with chcp; additionally, as stated, Windows PowerShell requires $OutputEncoding to be set (see this answer for details).

- For example, here's a quick-and-dirty approach to add this line to $PROFILE programmatically:

```powershell
$null = New-Item -Force -ItemType Directory (Split-Path $PROFILE)  # ensure the profile's directory exists
'$OutputEncoding = [console]::InputEncoding = [console]::OutputEncoding = New-Object System.Text.UTF8Encoding' + [Environment]::NewLine + (Get-Content -Raw $PROFILE -ErrorAction SilentlyContinue) | Set-Content -Encoding utf8 $PROFILE
```
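To confirm that the profile line took effect in a new session, you can inspect the relevant encoding objects. This is a sketch; the function name Get-SessionEncoding and its output property names are hypothetical.

```powershell
# Hypothetical helper: show the encodings that govern how this session
# communicates with external programs.
function Get-SessionEncoding {
  [pscustomobject] @{
    # Used when piping data *to* external programs.
    OutputEncoding        = $OutputEncoding.WebName
    # Used to decode output *from* external programs.
    ConsoleOutputEncoding = [Console]::OutputEncoding.WebName
    # The console's input encoding.
    ConsoleInputEncoding  = [Console]::InputEncoding.WebName
    # $true if $OutputEncoding has a UTF-8 BOM (preamble) defined.
    OutputEncodingHasBom  = $OutputEncoding.GetPreamble().Length -gt 0
  }
}
```

With the profile line in place, all three encoding names should read utf-8, and OutputEncodingHasBom should be $false.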

For cmd.exe, define an auto-run command via the registry, in value AutoRun of key HKEY_CURRENT_USER\Software\Microsoft\Command Processor (current user only) or HKEY_LOCAL_MACHINE\Software\Microsoft\Command Processor (all users):

- For instance, you can use PowerShell to create this value for you:

```powershell
# Auto-execute `chcp 65001` whenever the current user opens a `cmd.exe` console
# window (including when running a batch file):
Set-ItemProperty 'HKCU:\Software\Microsoft\Command Processor' AutoRun 'chcp 65001 >NUL'
```
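Note that Set-ItemProperty replaces any AutoRun value that may already be defined. If you'd rather append to an existing value, a sketch like the following could be used. The function names are hypothetical, and chaining with & assumes that cmd.exe processes the AutoRun value like an ordinary command line.

```powershell
# Hypothetical pure helper: compute the new AutoRun value from the existing one.
function Merge-AutoRunCommand {
  param([string] $Existing, [string] $Command = 'chcp 65001 >NUL')
  if ([string]::IsNullOrWhiteSpace($Existing)) { return $Command }  # nothing defined yet
  if ($Existing.Contains($Command)) { return $Existing }           # already present
  "$Existing & $Command"  # assumption: '&' chains commands, as on a cmd.exe command line
}

# Hypothetical helper (Windows only): apply the merged value for the current user.
function Add-CmdAutoRunUtf8 {
  $key = 'HKCU:\Software\Microsoft\Command Processor'
  # Create the key only if it is missing; New-Item -Force would replace an existing key.
  if (-not (Test-Path -LiteralPath $key)) { $null = New-Item -Path $key }
  $existing = (Get-ItemProperty -LiteralPath $key).AutoRun
  Set-ItemProperty -LiteralPath $key -Name AutoRun -Value (Merge-AutoRunCommand -Existing $existing)
}
```

To undo the change, remove the value with Remove-ItemProperty 'HKCU:\Software\Microsoft\Command Processor' AutoRun, which also removes any other commands stored in it.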

Optional reading: Why the Windows PowerShell ISE is a poor choice:

While the ISE does have better Unicode rendering support than the console, it is generally a poor choice:

First and foremost, the ISE is obsolescent: it doesn't support PowerShell (Core) 7+, where all future development will go, and it isn't cross-platform, unlike the new premier IDE for both PowerShell editions, Visual Studio Code, which already speaks UTF-8 by default for PowerShell Core and can be configured to do so for Windows PowerShell.

The ISE is generally an environment for developing scripts, not for running them in production (if you're writing scripts (also) for others, you should assume that they'll be run in the console); notably, with respect to running code, the ISE's behavior is not the same as that of a regular console:

Poor support for running external programs, not only due to its lack of support for interactive ones (see below), but also with respect to:

character encoding: the ISE mistakenly assumes that external programs use the ANSI code page by default, when in reality they use the OEM code page. For example, by default the following simple command, which merely passes a string echoed by cmd.exe through, malfunctions (see below for a fix):

```powershell
cmd /c echo hü | Write-Output
```
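One way to address this in the ISE is to make PowerShell decode external-program output with the OEM code page by setting [Console]::OutputEncoding accordingly. The following is a sketch under that assumption; the function name Set-IseOemOutputEncoding is hypothetical. It reads the OEM code page from the OEMCP value under HKLM:\SYSTEM\CurrentControlSet\Control\Nls\CodePage; since the ISE runs on Windows PowerShell (.NET Framework), GetEncoding() supports OEM code pages such as 437 out of the box.

```powershell
# Hypothetical helper for the Windows PowerShell ISE: decode the output of
# external programs using the system's OEM code page, which console programs
# such as cmd.exe use by default.
function Set-IseOemOutputEncoding {
  # The registry stores the code page number as a string, e.g. '437'.
  $oemCp = [int] (Get-ItemProperty -LiteralPath 'HKLM:\SYSTEM\CurrentControlSet\Control\Nls\CodePage').OEMCP
  [Console]::OutputEncoding = [System.Text.Encoding]::GetEncoding($oemCp)
}
```

Calling Set-IseOemOutputEncoding once per ISE session (or from the ISE's $PROFILE) should then let the cmd /c echo command above pass its string through intact.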

Inappropriate rendering of stderr output as PowerShell errors: see this answer.

The ISE dot-sources script-file invocations instead of running them in a child scope (the latter is what happens in a regular console window); that is, repeated invocations run in the very same scope. This can lead to subtle bugs, where definitions left behind by a previous run can affect subsequent ones.

As eryksun points out, the ISE doesn't support running interactive external console programs, namely those that require user input:

The problem is that it hides the console and redirects the process output (but not input) to a pipe. Most console applications switch to full buffering when a file is a pipe. Also, interactive applications require reading from stdin, which isn't possible from a hidden console window. (It can be unhidden via ShowWindow, but a separate window for input is clunky.)

[1] In PowerShell, if you never call external programs, the active code pages matter far less: PowerShell-native commands and .NET calls always communicate via UTF-16 strings (native .NET strings). For file I/O, PowerShell (Core) 7+ defaults to BOM-less UTF-8 irrespective of the system locale, whereas in Windows PowerShell the defaults vary by cmdlet, and some, notably those of Get-Content and Set-Content, are based on the system's active ANSI code page, as discussed above. Similarly, because the Unicode versions of the Windows API functions are used to print to and read from the console, non-ASCII characters always print correctly (within the rendering limitations of the console).

In cmd.exe, by contrast, the system locale matters for file I/O (with < and > redirections, but notably including what encoding to assume for batch-file source code), not just for communicating with external programs in-memory (such as when reading program output in a for /f loop).

[2] In PowerShell v4-, where the static ::new() method isn't available, use $OutputEncoding = (New-Object System.Text.UTF8Encoding).psobject.BaseObject. See GitHub issue #5763 for why the .psobject.BaseObject part is needed.